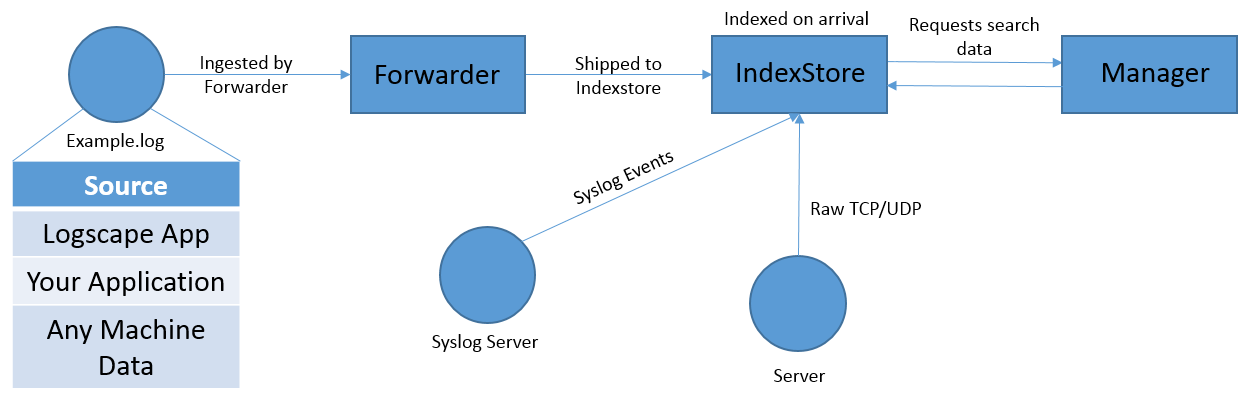

Looking at the image above, you can see that when a forwarder discovers a file it ships it to an Indexstore to be indexed. However, the image doesn't really explain the indexing process, so we're going to shine some light on that.

When a log file is sent from a forwarder to an Indexstore, the file is written to disk again on the Indexstore. From there it is picked up by the datasource, just as it would be on a manager. The 'Logscape Visitor' then detects that the file has changed and assigns a tailer to it. The tailer recognises when the file has been updated, rolled or deleted.

An update occurs when a new event is written to the file. The visitor extracts the filename, the path to the file and the current position, and adds these to the files database along with a unique file identifier.

The process doesn't stop there. Once the entry has been added to the files database, the event is passed to the 'Ingester', which is responsible for actually breaking the event down. It begins by checking the settings the file inherits from the datasource: Is it attempting to discover fields? Should it look for a timestamp that includes milliseconds? Is it looking to populate system fields? The ingester then breaks the event apart, storing all the discovered K:V (key:value) pairs in a .idx file, which is simply named [uniqueIdentifier].idx.

Your data is now ready to be searched.
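To make the flow above more concrete, here is a minimal Python sketch of the tail-then-ingest loop. It is an illustration only, not Logscape's actual implementation: the `Tailer` class, the `extract_fields` helper, the in-memory `files_db` dict, the `key=value` pattern and the JSON-lines layout of the .idx file are all assumptions made for this example. The only details taken from the description above are that the filename, path, position and a unique identifier are recorded, and that the discovered pairs land in `[uniqueIdentifier].idx`.

```python
import json
import re
import uuid
from pathlib import Path

# Hypothetical field-discovery rule: treat every key=value token as a field.
# The real discovery settings come from the datasource and are not shown here.
KV_PATTERN = re.compile(r"(\w+)=(\S+)")


def extract_fields(event: str) -> dict:
    """Return every key=value pair found in a single event line."""
    return dict(KV_PATTERN.findall(event))


class Tailer:
    """Tracks one file and ingests the lines appended since the last poll."""

    def __init__(self, path: Path, files_db: dict, index_dir: Path):
        self.path = path
        self.files_db = files_db
        # Unique file identifier, also used to name the .idx file.
        self.file_id = uuid.uuid4().hex
        self.index_path = index_dir / f"{self.file_id}.idx"
        # Stand-in for the files database: filename, path and position.
        files_db[self.file_id] = {
            "name": path.name,
            "path": str(path.parent),
            "position": 0,
        }

    def poll(self) -> int:
        """Ingest newly written events and return how many were processed."""
        entry = self.files_db[self.file_id]
        if self.path.stat().st_size < entry["position"]:
            # The file shrank, so assume it was rolled and start again.
            entry["position"] = 0
        with self.path.open("rb") as src:
            src.seek(entry["position"])
            chunk = src.read()
        # Consume complete lines only; a partial last line waits for the next poll.
        end = chunk.rfind(b"\n") + 1
        events = chunk[:end].decode("utf-8", errors="replace").splitlines()
        with self.index_path.open("a", encoding="utf-8") as idx:
            for event in events:
                idx.write(json.dumps(extract_fields(event)) + "\n")
        entry["position"] += end
        return len(events)
```

Calling `poll()` repeatedly on a `Tailer` mimics the visitor noticing updates: each call reads from the stored position, hands the new events to the field extraction step, and moves the position forward so that no event is ingested twice.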

So, to put that into steps: